Atoms


Introduction

We could have continued our discussion of two-level systems and resonance and introduced line shapes and various modes of excitation, but first we want to look at where the lines come from: atomic structure. We'll begin by considering the electronic structure of hydrogen and, more generally, of atoms with one valence electron, then study the case of helium to understand the effect of adding a second electron. In two subsequent chapters, we will consider the more detailed energy-level structure of hydrogen, including fine structure, the Lamb shift, and effects of the nucleus (hyperfine structure) and of external magnetic and electric fields.

Hydrogen Atom

Bohr's Postulates

We briefly review the Bohr atom, a model that was soon obsolete but which nevertheless provided the major impetus for developing quantum mechanics. Balmer's empirical formula of 1885 had reproduced \AA ngstr\"om's observations of spectral lines in hydrogen to 0.1 \AA\ accuracy, but it was not until 1913 that Bohr gave an explanation for this based on a quantized mechanical model of the atom. This model involved the postulates of the Bohr atom: \begin{itemize}

* Electron and proton are point charges whose interaction is Coulombic at all distances.

* The electron moves in a circular orbit about the center of mass in {\it stationary states} with orbital angular momentum $L = n\hbar$.

\end{itemize} These two postulates give the energy levels (in cgs units, with $m$ the reduced mass):

\begin{equation} -E_n = \frac{1}{2}\frac{m e^4}{\hbar^2}\frac{1}{n^2} \end{equation}

\begin{itemize}

* One quantum of radiation is emitted when the system changes between these energy levels.

* The wave number of the radiation is given by the Bohr frequency criterion\footnote{Note that the wave number is a spectroscopic unit defined as the number of wavelengths per cm, $\bar{\nu} = 1/\lambda$. It is important not to confuse the wave number with the magnitude of the wave vector $k$, which defines a traveling wave of the form $\exp[i(kz - \omega t)]$. The magnitude of the wave vector is $2\pi$ times the wave number.}:

\begin{equation} \bar{\nu}_{n \rightarrow m} = \frac{E_n - E_m}{hc} \end{equation}

\end{itemize} The mechanical spirit of the Bohr atom was extended by Sommerfeld in 1916 using the Wilson-Sommerfeld quantization rule (valid in the JWKB approximation),

\begin{equation} \oint p_i \, dq_i = n_i h \end{equation}

where $q_i$ and $p_i$ are conjugate coordinate and momentum pairs for each degree of freedom of the system. This extension yielded elliptical orbits which were found to have an energy nearly degenerate with respect to the orbital angular momentum for a particular principal quantum number $n$. The degeneracy was lifted by a relativistic correction whose splitting was in agreement with the observed fine structure of hydrogen. (This was a cruel coincidence in physics: the mechanical description ultimately had to be completely abandoned, in spite of the excellent agreement of theory and experiment.) Although triumphant in hydrogen, simple mechanical models of helium or other two-electron atoms failed, and real progress in understanding atoms had to await the development of quantum mechanics.
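As a numerical illustration of the Bohr energy levels and the frequency criterion, the following minimal Python sketch computes the vacuum wavelengths of the first Balmer lines. The function and constant names are illustrative choices, not part of the original notes; the Rydberg constant and the electron-to-proton mass ratio are CODATA values rounded to the digits shown.

```python
# Rydberg constant for infinite nuclear mass, in cm^-1 (CODATA value, rounded).
R_INF_CM = 109737.31568
# Electron-to-proton mass ratio (CODATA value, rounded).
ME_OVER_MP = 1.0 / 1836.15267

# Reduced-mass correction: the electron moves about the center of mass,
# so the Rydberg constant for hydrogen is slightly smaller than R_infinity.
R_H_CM = R_INF_CM / (1.0 + ME_OVER_MP)


def wave_number_cm(n_lower, n_upper, rydberg_cm=R_H_CM):
    """Wave number (cm^-1) of the line n_upper -> n_lower from the Bohr levels."""
    return rydberg_cm * (1.0 / n_lower**2 - 1.0 / n_upper**2)


def balmer_wavelength_nm(n_upper):
    """Vacuum wavelength (nm) of the Balmer line n_upper -> 2."""
    return 1.0e7 / wave_number_cm(2, n_upper)  # 1 cm = 1e7 nm


if __name__ == "__main__":
    for n in (3, 4, 5):
        print(f"n = {n} -> 2: {balmer_wavelength_nm(n):.2f} nm (vacuum)")
```

The values are vacuum wavelengths; tabulated air wavelengths are slightly shorter because of the refractive index of air.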

Solution of Schrodinger Equation: Key Results

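As you will recall from introductory quantum mechanics, the Schr\"odinger equation

\begin{equation} H\psi = E\psi \end{equation}

can be solved exactly for the Coulomb Hamiltonian

\begin{equation} H = \frac{p^2}{2m} - \frac{Ze^2}{r} \label{eq:coulomb} \end{equation}

by separation of variables

\begin{equation} \psi_{nlm}(r,\theta,\phi) = R_{nl}(r)\, Y_{lm}(\theta,\phi) \end{equation}

The full solution is reviewed in Appendix \ref{app:schr}. Here, we will highlight some important results: \begin{itemize}

\item $E_n = -R\,\frac{Z^2}{n^2}$, just as in the Bohr model

\item $\langle r \rangle_{nl} = \frac{a_0}{2Z}\left[3n^2 - l(l+1)\right]$

\item $\left\langle \frac{1}{r} \right\rangle_{nl} = \frac{Z}{n^2 a_0}$

\end{itemize} Here, $R = \frac{me^4}{2\hbar^2}$ is the Rydberg constant (in energy units) and $a_0 = \frac{\hbar^2}{me^2}$, where $m$ is the effective mass. Note that the relation

\begin{equation} E_n = -\frac{1}{2}\left\langle \frac{Ze^2}{r} \right\rangle \end{equation}

follows directly from the Virial Theorem, which states in general that $\langle T \rangle = \frac{1}{2}\langle \mathbf{r}\cdot\nabla V \rangle$, so that for a spherically symmetric potential of the form $V \propto r^k$ we have $\langle T \rangle = \frac{k}{2}\langle V \rangle$, and for the special case of the Coulomb potential $\langle T \rangle = -\frac{1}{2}\langle V \rangle$ (footnote: In addition to the important case of the Coulomb potential, it is worth remembering the Virial Theorem for the harmonic oscillator, $\langle T \rangle = \langle V \rangle$.)

The same factor of $\frac{1}{2}$ from the Virial Theorem appears in the relationship between the Rydberg constant and the atomic unit of energy, the hartree:

\begin{equation} R = \frac{e^2}{2a_0} = \frac{1}{2}\ \textrm{hartree} \end{equation}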

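We are working here in cgs units because the expressions are more concise in cgs than in SI. To convert to SI, simply make the replacement $e^2 \rightarrow e^2/(4\pi\epsilon_0)$. \begin{quote} Question: How does the density of an $s$-electron at the origin depend on $n$ and $Z$? A. B. C. D. \par The exact result for the density at the origin is (C), $|\psi_{n00}(0)|^2 = Z^3/(\pi n^3 a_0^3)$. To arrive at the $Z$ scaling, we observe that $Z$ and $e$ only appear in the Hamiltonian (\ref{eq:coulomb}) in the combination $Ze^2$. Thus, any result for hydrogen can be extended to the case of nuclear charge $Z$ simply by making the substitution $e^2 \rightarrow Ze^2$. This leads, in turn, to the substitutions

\begin{equation} R \propto e^4 \rightarrow Z^2 R, \qquad a_0 \propto \frac{1}{e^2} \rightarrow \frac{a_0}{Z} \end{equation}

Thus, the density $|\psi(0)|^2 \propto a_0^{-3} \propto Z^3$, so the answer can only be A or C. It is tempting to assume that the $n$ scaling can be derived from the length scale $\langle r \rangle \propto n^2 a_0$. However, the characteristic length for the density at the origin is that which appears in the exponential term of the wavefunction $\psi_{n00} \propto e^{-Zr/(na_0)}$,

\begin{equation} \frac{n a_0}{Z} \end{equation}

Thus,

\begin{equation} |\psi_{n00}(0)|^2 \propto \frac{Z^3}{n^3 a_0^3} \end{equation}

for all $n$. Remember this scaling, as it will be important for understanding such effects as the quantum defect in atoms with core electrons and hyperfine structure. \end{quote} For the case of $l \neq 0$, $\psi$ has a node at $r = 0$, but the density \textit{near} the origin is nonetheless of interest. It can be shown (Landau III, \S 36 \cite{Landau}) that, for small $r$ (with $r$ in units of $a_0$),

\begin{equation} R_{nl}(r) \approx r^l\, Z^{l+3/2}\, \frac{2^{l+1}}{n^{l+2}(2l+1)!}\sqrt{\frac{(n+l)!}{(n-l-1)!}} \end{equation}

Since $(n+l)!/(n-l-1)! \rightarrow n^{2l+1}$ for $n \gg l$, the density near the origin scales for large $n$ as

\begin{equation} |\psi_{nl}(r \rightarrow 0)|^2 \propto \frac{r^{2l} Z^{2l+3}}{n^3} \end{equation}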

Atomic Units

The natural units for describing atomic systems are obtained by setting to unity the three fundamental constants that appear in the hydrogen Hamiltonian (Eq. \ref{eq:coulomb}), $\hbar = m = e = 1$. One thus arrives at atomic units, such as \begin{itemize}

* length: Bohr radius $a_0 = \frac{\hbar^2}{me^2} = \frac{1}{\alpha}\frac{\hbar}{mc} = 0.53$ \AA

* energy: 1 hartree $= \frac{e^2}{a_0} = \frac{me^4}{\hbar^2} = \alpha^2 mc^2 = 27.2$ eV

* velocity: $\frac{e^2}{\hbar} = \alpha c = 2.2\times 10^8$ cm/s

* electric field: $\frac{e}{a_0^2} = 5.142\times 10^9$ V/cm (footnote: This is the characteristic value for the $n=1$ orbit of hydrogen.)

\end{itemize} As we see above, we can express the atomic units in terms of $c$ instead of $e$ by introducing a single dimensionless constant

\begin{equation} \alpha = \frac{e^2}{\hbar c} \approx \frac{1}{137} \end{equation}

The \textit{fine structure constant} $\alpha$ (footnote: The name "fine structure constant" derives from the appearance of $\alpha^2$ in the ratio of fine structure splitting to the Rydberg.) is the only fundamental constant in atomic physics. As such, it should ultimately be predicted by a complete theory of physics. Whereas precision measurements of other constants are made in atomic physics for purely metrological purposes (see Appendix \ref{app:metrology}), $\alpha$, as a dimensionless constant, is not defined by metrology. Rather, $\alpha$ characterizes the strength of the electromagnetic interaction, as the following example will illustrate. If energy uncertainties become as large as $\Delta E = mc^2$, the concept of a particle breaks down. This upper bound on the energy uncertainty gives us, via the Heisenberg Uncertainty Principle, a lower bound on the length scale within which an electron can be localized: $\Delta r \gtrsim \hbar/(mc) = \alpha a_0$, the reduced Compton wavelength. Even at this short distance of $\hbar/(mc)$, the Coulomb interaction, while stronger than that in hydrogen at distance $a_0$, is only $e^2/[\hbar/(mc)] = \alpha\, mc^2$, i.e. in relativistic units the strength of this "stronger" Coulomb interaction is $\alpha \approx 1/137$. That says that the Coulomb interaction is weak.
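The atomic units and the weakness argument can be checked numerically. The sketch below evaluates them from SI values of the constants, using the replacement $e^2 \rightarrow e^2/(4\pi\epsilon_0)$ mentioned earlier. The constant values are CODATA 2018 numbers rounded as shown, and the function and key names are illustrative assumptions.

```python
import math

# SI values of the fundamental constants (CODATA 2018, rounded).
HBAR = 1.054571817e-34       # J s
M_E = 9.1093837015e-31       # kg
E_CHARGE = 1.602176634e-19   # C
C_LIGHT = 299792458.0        # m/s
EPS0 = 8.8541878128e-12      # F/m

# The cgs combination e^2 corresponds to e^2 / (4 pi eps0) in SI (units: J m).
E2 = E_CHARGE**2 / (4.0 * math.pi * EPS0)


def atomic_units():
    """Return the fine structure constant and a few atomic units."""
    alpha = E2 / (HBAR * C_LIGHT)
    a0 = HBAR**2 / (M_E * E2)                      # Bohr radius, m
    hartree_ev = (E2 / a0) / E_CHARGE              # hartree, eV
    velocity = E2 / HBAR                           # alpha * c, m/s
    field_v_per_cm = E_CHARGE / (4.0 * math.pi * EPS0 * a0**2) / 100.0
    compton = HBAR / (M_E * C_LIGHT)               # reduced Compton wavelength, m
    # Coulomb energy at the reduced Compton wavelength, in units of m c^2.
    coulomb_ratio = (E2 / compton) / (M_E * C_LIGHT**2)
    return {
        "alpha": alpha,
        "a0_m": a0,
        "hartree_eV": hartree_ev,
        "velocity_m_per_s": velocity,
        "field_V_per_cm": field_v_per_cm,
        "compton_over_a0": compton / a0,
        "coulomb_over_mc2": coulomb_ratio,
    }


if __name__ == "__main__":
    for name, value in atomic_units().items():
        print(f"{name:>18s} = {value:.6g}")
```

Both `compton_over_a0` and `coulomb_over_mc2` come out equal to $\alpha$, which is the content of the argument above.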

One-Electron Atoms with Cores

\begin{figure}

\caption{One-electron atom with core of net charge Z.}

\end{figure} \begin{quote} Question: Consider now an atom with one valence electron of principal quantum number $n$ outside a core (comprising the nucleus and filled shells of electrons) of net charge $Z$. Which of the following forms describes the lowest order correction to the hydrogenic energy $E_n = -RZ^2/n^2$? A. B. C. D. Both C and D are correct answers, equivalent to lowest order in $\delta_l$. This scaling can be derived via perturbation theory. In particular, we can model the atom with a core of net charge $Z$ as a hydrogenic atom with nuclear charge $Z$, described by Hamiltonian $H_0$, and add a perturbing Hamiltonian $H'$ to account for the penetration of the valence electron into the core. As we showed in \ref{q:nZ}, $|\psi(r \rightarrow 0)|^2 \propto n^{-3}$. Thus, for the Hamiltonian

\begin{equation} H = H_0 + H' \end{equation}

where the penetration correction $H'$ is localized around $r = 0$, the deviation from hydrogenic energy levels is

\begin{equation} \Delta E_n = \langle H' \rangle \propto -\frac{1}{n^3} \end{equation}

where the proportionality constant depends on $l$. In particular, we can define the \textit{quantum defect} $\delta_l$ such that

\begin{equation} \Delta E_n = -\frac{2RZ^2\,\delta_l}{n^3} \end{equation}

The energy levels then take the form

\begin{equation} E_n = -\frac{RZ^2}{n^2} - \frac{2RZ^2\,\delta_l}{n^3} \approx -\frac{RZ^2}{(n-\delta_l)^2} \end{equation}

\end{quote} This accounts for the phenomenological form of the term values in the Balmer-Ritz formula,

\begin{equation} T_n = \frac{RZ^2}{(n-\delta_l)^2} \label{EQ_phenthree} \end{equation}

This formula, in which the effective quantum numbers $n^* = n - \delta_l$ are non-integers but are spaced by integer differences, had been deduced spectroscopically long before it could be understood quantum mechanically. See Appendix \ref{app:qdefect} for a derivation of the quantum defect based on solving the radial Schr\"odinger equation in the JWKB approximation, which sheds light on the relation of the quantum defect to the phase shift of an electron scattering off a modified Coulomb potential, and for a sample calculation of the quantum defect for a model atom.
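To make the quantum defect concrete, the sketch below inverts the Balmer-Ritz form $E = -RZ^2/(n-\delta_l)^2$ for $\delta_l$, using the measured ionization energy of the sodium $3s$ ground state (5.139 eV) as input, and compares the exact form with its lowest-order expansion. The function names are illustrative; the sketch assumes the infinite-mass Rydberg energy and treats $\delta_l$ as independent of energy, both of which are approximations.

```python
import math

RYDBERG_EV = 13.605693  # Rydberg energy for infinite nuclear mass, eV (rounded)


def quantum_defect(binding_energy_ev, n, z_core=1):
    """Invert E = -R Z^2 / (n - delta)^2 for delta, given the binding energy |E|."""
    n_star = z_core * math.sqrt(RYDBERG_EV / binding_energy_ev)
    return n - n_star


def level_energy_ev(n, delta, z_core=1):
    """Energy from the Balmer-Ritz form, in eV."""
    return -RYDBERG_EV * z_core**2 / (n - delta) ** 2


def first_order_energy_ev(n, delta, z_core=1):
    """Hydrogenic energy plus the lowest-order correction -2 R Z^2 delta / n^3."""
    return -RYDBERG_EV * z_core**2 * (1.0 / n**2 + 2.0 * delta / n**3)


NA_3S_BINDING_EV = 5.139  # ionization energy of sodium (3s valence electron)

if __name__ == "__main__":
    delta_s = quantum_defect(NA_3S_BINDING_EV, 3)
    print(f"sodium 3s quantum defect: {delta_s:.3f}")
    # Assuming the same delta for higher s states (only approximately true):
    for n in (4, 5, 10):
        print(n, level_energy_ev(n, delta_s), first_order_energy_ev(n, delta_s))
```

The two forms agree only when $\delta_l/n$ is small, so the expansion is poor for the sodium ground state itself but improves rapidly along the series.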

Spectroscopic Notation

Neutral atoms consist of a heavy nucleus with charge $Ze$ surrounded by $Z$ electrons. Positively charged atomic ions generally have structure similar to the atom with the same number of electrons except for a scale factor; negative ions lack the attractive Coulomb interaction at large electron-core separation and hence have few if any bound levels. Thus the essential feature of an atom is its number of electrons, and their mutual arrangement as expressed in the quantum numbers. An isolated atom has two good angular momentum quantum numbers, $J$ and $M_J$. (This is strictly true only for atoms whose nuclei have spin $I = 0$. However, $J$ is never significantly destroyed by coupling to $I$ in ground state atoms.) In zero external field the atomic Hamiltonian possesses rotational invariance, which implies that each $J$ level is degenerate with respect to the $2J+1$ states with specific $M_J$ (traditional atomic spectroscopists call these states "sublevels"). For each $J$, $M_J$ an atom will typically have a large number of discrete energy levels (plus a continuum) which may be labeled by other quantum numbers. If Russell-Saunders coupling ($LS$ coupling) is a good description of the atom (true for light atoms), then $L$ and $S$, where

\begin{equation} \mathbf{L} = \sum_i \mathbf{l}_i, \qquad \mathbf{S} = \sum_i \mathbf{s}_i \end{equation}

are nearly good quantum numbers and may be used to distinguish the levels. In this case the level is designated by a {\it Term} symbolized $^{2S+1}L_J$, where $2S+1$ and $J$ are written numerically and $L$ is designated with this letter code:

| $L$: | 0 | 1 | 2 | 3 | 4 | ... |
| Letter: | S | P | D | F | G | ... |

The letters stand for Sharp, Principal, Diffuse, and Fundamental, adjectives applying to the spectral lines of one-electron atoms. When describing a hydrogenic atom, the term is preceded by the principal quantum number of the outermost electron. For example, in sodium, the ground state is designated $3\,^2S_{1/2}$, while the D-line excited states are $3\,^2P_{1/2}$ and $3\,^2P_{3/2}$. This discussion of the term symbol has been based on an external view of the atom. Alternatively one may have or assume knowledge of the internal structure, i.e. the quantum numbers of each electron. These are specified as the {\it configuration}, e.g. $1s^2 2s^2 2p^2$,

a product of symbols of the form $nl^k$, which represents $k$ electrons in the orbital $n$, $l$. $n$ is the principal quantum number, which characterizes the radial motion and has the largest influence on the energy. $n$ and $k$ are written numerically, but the $s, p, d, f, \ldots$ coding is used for $l$. Returning to the example of sodium, the configuration is $1s^2 2s^2 2p^6 3s$, which is often abbreviated to simply $3s$. In classifying levels, the term is generally more important than the configuration because it determines the behavior of an atom when it interacts with $\mathbf{E}$ or $\mathbf{B}$ fields. Selection rules, for instance, generally deal with $\Delta J$, $\Delta L$, and $\Delta S$. Furthermore the configuration may not be pure: if two configurations give rise to the same term (and have the same parity) then intra-atomic electrostatic interactions can mix them together. This process, called configuration interaction, results in shifts in the level positions and intensities of spectral lines involving them as well as in correlations in the motions of the electrons within the atoms.
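The letter code and the term notation can be illustrated with a short Python sketch. It renders a term as plain text (for example `2P3/2` for $^2P_{3/2}$) and lists the $J$ values allowed by $LS$ coupling, $|L-S| \le J \le L+S$. The function names and the plain-text rendering are illustrative choices, not a standard library interface.

```python
from fractions import Fraction

# Letter code for L = 0, 1, 2, ...; by convention the letter J is skipped.
L_LETTERS = "SPDFGHIK"


def _format_half_integer(x):
    """Render an integer or half-integer Fraction as '1', '3/2', ..."""
    x = Fraction(x)
    return str(x.numerator) if x.denominator == 1 else f"{x.numerator}/{x.denominator}"


def term_symbol(S, L, J):
    """Plain-text version of the term symbol (2S+1) L_J."""
    multiplicity = int(2 * Fraction(S) + 1)
    return f"{multiplicity}{L_LETTERS[L]}{_format_half_integer(J)}"


def allowed_J(S, L):
    """All J with |L - S| <= J <= L + S, in integer steps."""
    S = Fraction(S)
    J = abs(L - S)
    values = []
    while J <= L + S:
        values.append(J)
        J += 1
    return values


if __name__ == "__main__":
    half = Fraction(1, 2)
    # Sodium: ground term and the two D-line excited terms.
    print(term_symbol(half, 0, half))
    print([term_symbol(half, 1, J) for J in allowed_J(half, 1)])
```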

Energy Levels of Helium

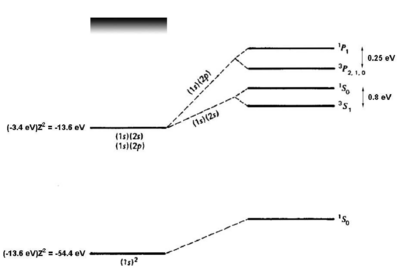

\begin{figure}

\caption{Energy levels of helium. Figure adapted from Gasiorowicz \cite{Gasiorowicz}. The quantum mechanics texts of Gasiorowicz and Cohen-Tannoudji \cite{CohenT} are valuable resources for more detailed treatment of the helium atom.}

\end{figure} If we naively estimate the binding energy of ground-state helium ($1s^2$) as twice the binding energy of a single electron in a hydrogenic atom with $Z = 2$, we obtain $2Z^2R = 108.8$ eV (with $R = 13.6$ eV). Comparing to the experimental result of 79 eV, we see a \textit{big} (30 eV) discrepancy. However, we have neglected the electron-electron interaction. Introducing a perturbation operator

\begin{equation} H' = \frac{e^2}{r_{12}} \end{equation}

where $r_{12}$ is the distance between the two electrons, and still approximating each electron to be in the $1s$ state, which yields a total wavefunction $\psi(\mathbf{r}_1,\mathbf{r}_2) = \psi_{1s}(\mathbf{r}_1)\psi_{1s}(\mathbf{r}_2)$, we obtain the first-order correction

\begin{equation} \Delta E = \left\langle \frac{e^2}{r_{12}} \right\rangle = \frac{5}{4} Z R = 34\ \textrm{eV} \end{equation}

which removes most of the discrepancy. A single-parameter variational ansatz using $Z$ as parameter removes about two-thirds of the remaining discrepancy. Thus, although we cannot solve the helium Hamiltonian exactly, we can understand the ground-state energy well simply by taking into account the shielding of the nuclear charge by the electron-electron interaction. For the first excited states, there are two possible electronic configurations, $1s2s$ and $1s2p$. The $2p$ electron sees a shielded nucleus ($Z_{\rm eff} \approx 1$ vs. $Z = 2$) due to the $1s$ electron, and consequently has a smaller binding energy, comparable to the $n = 2$ binding energy for hydrogen ($3.4$ eV). For the $2s$ electron, which has no node at the nucleus, the shielding effect is similar but weaker (see figure \ref{fig:he_levels}). Consider now the possible terms for the $1s2s$ configuration: \begin{itemize}

* (singlet) * (triplet)

\end{itemize} The singlet and triplet states are, respectively, antisymmetric and symmetric under particle exchange. Since Fermi statistics demand that the total wavefunction of the electrons be antisymmetric, the spatial and spin wavefunctions must have opposite symmetry,

Here, is the wavefunction with symmetric spatial part and antisymmetric spin state , i.e. the spin singlet (S=0, term). is the wavefunction with antisymmetric spatial part and symmetric spin state , i.e. the spin triplet (S=1, term). Now consider the Coulomb energy of the interaction in these excited states:

The first term (called the Coulomb term, although both terms are Coulombic in origin) is precisely what we would calculate classically for the repulsion between two electron clouds of densities and . The second term, the exchange term, is a purely quantum mechanical effect associated with the Pauli exclusion principle. The triplet state has lower energy, because the antisymmetric spatial wavefuntion reduces repulsion interactions. Since the interaction energy is spin-dependent, it can be written in the same form as a ferromagnetic spin-spin interaction,

To see this, we use the relation . Since , eq. \ref{eq:ferro} is equivalent to

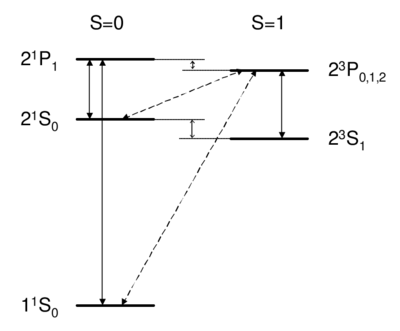

where we have substituted for the singlet and triplet states, respectively. Bear in mind that, although the interaction energy is spin-dependent, the origin of the coupling is electrostatic and not magnetic. Because of its electrostatic origin, the exchange energy (0.8 eV for the splitting, as indicated in figure \ref{fig:he_levels}) is much larger than a magnetic effect such as the fine structure splitting, which carries an extra factor of . \begin{figure}

\caption{Dipole-allowed transitions (solid) and intercombination lines (dashed) in helium.}

\end{figure} \QA{What field (coupling) drives singlet-triplet transitions? A. Optical fields (dipole operator) B. Rotating magnetic fields C. Both D. None }{ Singlet-triplet transitions are forbidden by the $\Delta S = 0$ selection rule, so to first order the answer is D. A is ruled out because the dipole operator acts on the spatial wavefunction, not on the spin part. One might think (B) that a transverse magnetic field, which couples to $S_x$, $S_y$, can be used to flip a spin. However, a transverse field can only rotate the two spins together; it cannot rotate one spin relative to the other, as would be necessary to change the magnitude of $S$. Because all spin operators are symmetric against particle exchange, they couple only states of the same exchange symmetry ($S \leftrightarrow S$, $A \leftrightarrow A$). Thus, spatial and spin symmetry (S, A) are both good quantum numbers. More formally, if all observables commute with the particle exchange operator $P_{12}$, then the exchange symmetry (S or A) is a good quantum number. Thus, as long as wavefunction and operators separate into spin dependent and space dependent parts, both symmetries are conserved. Intercombination (i.e. transition between singlet and triplet) is only possible when this assumption is violated, i.e. when the spin and spatial wavefunctions are mixed, e.g. by spin-orbit coupling. As we will see in Ch. \ref{ch:fs}, spin-orbit coupling is $\propto Z^4$, and hence is weak for helium. Thus, the $2\,^3S_1$ state of helium is \textit{very} long-lived, with a lifetime of about 8,000 s. (footnote: Historically, the absence of transitions between singlet and triplet states led to the belief that there were two kinds of helium, ortho-helium (triplet) and para-helium (singlet).) The other noble gases (Ne, Ar, Kr, Xe) have metastable-state lifetimes on the order of 40 s. Because they are so narrow, intercombination lines are of great interest for application in optical clocks.
While a lifetime of 8,000 s is \textit{too} long to be useful (because such a narrow transition is difficult both to find and to drive), intercombination lines in Mg, Ca, or Sr, with linewidths ranging from mHz to kHz, are discussed as potential optical frequency standards \cite{Ludlow2006, Ludlow2008}. } \begin{subappendices}

Appendix: Solution of the Schrodinger Equation

Radial Schrodinger equation for central potentials

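Stationary solutions of the time dependent Schr\"odinger equation

\begin{equation} i\hbar \frac{\partial \Psi(\mathbf{r},t)}{\partial t} = H \Psi(\mathbf{r},t) \end{equation}

\noindent ($H$ is the Hamiltonian operator) can be represented as

\begin{equation} \Psi(\mathbf{r},t) = e^{-iE_n t/\hbar}\, \psi_n(\mathbf{r}) \end{equation}

\noindent where $n$ stands for all quantum numbers necessary to label the state. This leads to the time-independent Schr\"odinger equation

\begin{equation} \left[H - E_n\right]\psi_n(\mathbf{r}) = 0 \end{equation}

A pervasive application of this equation in atomic physics is for the case of a spherically symmetric one-particle system of mass $\mu$. In this case the Hamiltonian is

\begin{equation} H = \frac{p^2}{2\mu} + V(r) = -\frac{\hbar^2}{2\mu}\left[\frac{1}{r^2}\frac{\partial}{\partial r}\left(r^2 \frac{\partial}{\partial r}\right) + \frac{1}{r^2 \sin\theta}\frac{\partial}{\partial\theta}\left(\sin\theta \frac{\partial}{\partial\theta}\right) + \frac{1}{r^2\sin^2\theta}\frac{\partial^2}{\partial\phi^2}\right] + V(r) \end{equation}

\noindent where the kinetic energy operator has been written in spherical coordinates. Because $V$ is spherically symmetric, the angular dependence of the solution is characteristic of spherically symmetric systems in general and may be factored out:

\begin{equation} \psi_{nlm}(\mathbf{r}) = R_{nl}(r)\, Y_{lm}(\theta,\phi) \label{EQ_secpfive} \end{equation}

\noindent $Y_{lm}$ are the {\it spherical harmonics} and $l$ labels the eigenvalue of the operator for the orbital angular momentum, $\mathbf{L}$,

\begin{equation} L^2\, Y_{lm} = l(l+1)\hbar^2\, Y_{lm} \end{equation}

and $m$ labels the eigenvalue of the projection of $\mathbf{L}$ on the quantization axis (which may be chosen at will)

\begin{equation} L_z\, Y_{lm} = m\hbar\, Y_{lm} \end{equation}

With the substitution Eq.\ \ref{EQ_secpfive}, the time independent radial Schr\"odinger equation becomes

\begin{equation} \frac{1}{r^2}\frac{d}{dr}\left(r^2 \frac{dR_{nl}}{dr}\right) + \left[\frac{2\mu}{\hbar^2}\left(E_{nl} - V(r)\right) - \frac{l(l+1)}{r^2}\right] R_{nl} = 0 \end{equation}

This is the equation which is customarily solved for the hydrogen atom's radial wave functions. For most applications (atoms, scattering by a central potential, diatomic molecules) it is more convenient to make a further substitution,

\begin{equation} R_{nl}(r) = \frac{y_{nl}(r)}{r} \end{equation}

which leads to

\begin{equation} \frac{d^2 y_{nl}}{dr^2} + \left[\frac{2\mu}{\hbar^2}\left(E_{nl} - V(r)\right) - \frac{l(l+1)}{r^2}\right] y_{nl}(r) = 0 \label{EQ_secpten} \end{equation}

with the boundary condition $y_{nl}(0) = 0$. This equation is identical with the time independent Schr\"odinger equation for a particle of mass $\mu$ in an effective one dimensional potential,

\begin{equation} V_{\rm eff}(r) = V(r) + \frac{\hbar^2\, l(l+1)}{2\mu r^2} \end{equation}

The term $\hbar^2 l(l+1)/(2\mu r^2)$ is called the {\it centrifugal potential}, and adds to the actual potential the kinetic energy of the circular motion that must be present to conserve angular momentum.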

Radial equation for hydrogen

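The quantum treatment of hydrogenic atoms or ions appears in many textbooks and we present only a summary\footnote{The most comprehensive treatment of hydrogen is the classic text of Bethe and Salpeter, {\it The Quantum Mechanics of One- and Two-Electron Atoms}, H. A. Bethe and E. E. Salpeter, Academic Press (1957). Messiah is also excellent.}. For hydrogen Eq.\ \ref{EQ_secpten} becomes

\begin{equation} \frac{d^2 y}{dr^2} + \left[\frac{2\mu}{\hbar^2}\left(E + \frac{e^2}{r}\right) - \frac{l(l+1)}{r^2}\right] y = 0 \label{EQ_rehone} \end{equation}

\noindent First look at this as $r \rightarrow 0$; the dominant terms are

\begin{equation} \frac{d^2 y}{dr^2} - \frac{l(l+1)}{r^2}\, y = 0 \end{equation}

for any value of $E$. It is easily verified that the two independent solutions are

\begin{equation} y = r^{l+1} \qquad \textrm{and} \qquad y = r^{-l} \label{EQ_rehthree} \end{equation}

For $l \geq 1$ the only normalizable solution is $y = r^{l+1}$. Question: What happens to these arguments for $l = 0$? What implications does this have for the final solution? Messiah has a good discussion of this. We look next at the solution for $r \rightarrow \infty$, where we may investigate a simpler equation if $\lim_{r\rightarrow\infty} V(r) = 0$. For large $r$:

\begin{equation} \frac{d^2 y}{dr^2} + \frac{2\mu E}{\hbar^2}\, y = 0 \end{equation}

If $E > 0$, this equation has oscillating solutions corresponding to a free particle. For $E < 0$ the equation has exponential solutions, but only the decaying exponential is physically acceptable (i.e. normalizable):

\begin{equation} y(r) = e^{-(-2\mu E/\hbar^2)^{1/2}\, r} \label{EQ_rehfive} \end{equation}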

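When $E < 0$, it is possible to obtain physically reasonable solutions to Eq.\ \ref{EQ_secpten} (or indeed {\it any} bound state problem) only for certain discrete values of $E$, its eigenvalues. This situation arises from the requirement that the radial solution be normalizable, which requires that $\int_0^\infty y^2(r)\, dr = 1$, or alternatively, that $y(r)$ vanishes sufficiently rapidly at large $r$. Eq.\ \ref{EQ_rehone} is a prescription for generating a function $y(r)$ for arbitrary $E < 0$ given $y$ and $dy/dr$ at any point. It can be solved exactly for hydrogen. For other central potentials, one can find the eigenvalues and eigenstates by computation. One proceeds as follows: Select a trial eigenvalue, $E < 0$. Starting at large $r$, a "solution" of the form of Eq.\ \ref{EQ_rehfive} is selected and extended in to some intermediate value of $r$, $r_m$. At the origin one must select the solution of the form $y = r^{l+1}$ (Eq.\ \ref{EQ_rehthree}); this "solution" is then extended out to $r_m$. The two "solutions" may be made to have the same value at $r_m$ by multiplying one by a constant; however, the resulting function is a valid solution only if the first derivative is continuous at $r_m$, and this occurs only for a discrete set of $E$. The procedure described here is, in fact, the standard Numerov-Cooley algorithm for finding bound states. Its most elegant feature is a procedure for adjusting the trial eigenvalue using the discontinuity in the derivative that converges to the correct energy very rapidly. For the hydrogen atom, the eigenvalues can be determined analytically. The substitution

\begin{equation} y_l(r) = r^{l+1}\, e^{-(-2\mu E/\hbar^2)^{1/2}\, r}\, v_l(r) \label{EQ_rehsix} \end{equation}

leads to a particularly simple equation. To make it dimensionless, one changes the variable from $r$ to $x$,

\begin{equation} x = \frac{2(-2\mu E)^{1/2}}{\hbar}\, r \end{equation}

so the exponential in Eq.\ \ref{EQ_rehsix} becomes $e^{-x/2}$, and defines

\begin{equation} \nu = \frac{\hbar}{(-2\mu E)^{1/2}\, a_0} \label{EQ_reheight} \end{equation}

where $a_0 = \hbar^2/(\mu e^2)$ is the Bohr radius:

\begin{equation} \left[x \frac{d^2}{dx^2} + (2l + 2 - x)\frac{d}{dx} - (l + 1 - \nu)\right] v_l = 0 \end{equation}

This is an equation of Laplace type (Kummer's equation) and its solution is a confluent hypergeometric series. To find the eigenvalues one now tries a Taylor series

\begin{equation} v_l(x) = 1 + a_1 x + a_2 x^2 + \cdots \end{equation}

for $v_l$. This satisfies the equation only if the coefficient of each power of $x$ vanishes, i.e.

\begin{eqnarray} x^0 &:& (2l+2)\, a_1 - (l + 1 - \nu) = 0 \nonumber \\ x^1 &:& 2(2l+3)\, a_2 - (l + 2 - \nu)\, a_1 = 0 \nonumber \\ x^{p-1} &:& p(2l + 1 + p)\, a_p - (l + p - \nu)\, a_{p-1} = 0 \label{EQ_reheleven} \end{eqnarray}

The first line fixes $a_1$, the second then determines $a_2$, and in general

\begin{equation} a_p = \frac{l + p - \nu}{p(2l + 1 + p)}\, a_{p-1} \end{equation}

In general Eq.\ \ref{EQ_reheleven} will give a coefficient $a_p$ on the order of $1/p!$, so

\begin{equation} v_l(x) \sim \sum_p \frac{x^p}{p!} = e^{x} \end{equation}

This spells disaster because it means $y = r^{l+1} e^{-x/2} v_l(x)$ diverges. The only way in which this can be avoided is if the series truncates, i.e. if $\nu$ is an integer:

\begin{equation} \nu = n = n' + l + 1, \qquad n' = 0, 1, 2, \ldots \label{EQ_rehfourteen} \end{equation}

so that $a_{n'+1}$ will be zero and $v_l$ will have $n'$ nodes. Since $n' \geq 0$, it is clear that you must look at energy level $n \geq l + 1$ to find a state with angular momentum $l$ (e.g. the $2d$ configuration does not exist). This gives the eigenvalues of hydrogen (from Eq.\ \ref{EQ_reheight})

\begin{equation} E_n = -\frac{1}{2}\frac{\mu e^4}{\hbar^2}\frac{1}{n^2} = -\frac{1}{2}\alpha^2 \mu c^2\, \frac{1}{n^2} \end{equation}

which agrees with the Bohr formula.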
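The numerical procedure described earlier in this appendix can be sketched in a few lines of Python. The version below is a simplified variant rather than the full Numerov-Cooley algorithm: it integrates the radial equation outward only, with Numerov steps, and bisects on the trial energy until the sign of the diverging tail at large $r$ flips, instead of matching inward and outward solutions at an intermediate point. It works in atomic units ($\hbar = \mu = e = 1$), where the radial equation reads $y'' = \left[l(l+1)/r^2 - 2/r - 2E\right] y$. The grid spacing, cutoff radius, and function names are illustrative assumptions.

```python
def u_at_rmax(energy, l, r_max=40.0, h=0.01):
    """Integrate y'' = f(r) y outward with Numerov steps and return y(r_max)."""

    def f(r):
        return l * (l + 1) / r**2 - 2.0 / r - 2.0 * energy

    def series_start(r):
        # Regular solution near the origin: r^(l+1) (1 + a r + b r^2).
        a = -1.0 / (l + 1)
        b = (2.0 / (l + 1) - 2.0 * energy) / (4 * l + 6)
        return r ** (l + 1) * (1.0 + a * r + b * r * r)

    n_steps = int(round(r_max / h))
    c = h * h / 12.0
    r_prev, r_curr = h, 2.0 * h
    u_prev, u_curr = series_start(r_prev), series_start(r_curr)
    for i in range(3, n_steps + 1):
        r_next = i * h
        # Numerov recurrence for y'' = f y.
        u_next = (2.0 * (1.0 + 5.0 * c * f(r_curr)) * u_curr
                  - (1.0 - c * f(r_prev)) * u_prev) / (1.0 - c * f(r_next))
        r_prev, r_curr = r_curr, r_next
        u_prev, u_curr = u_curr, u_next
    return u_curr


def find_eigenvalue(e_low, e_high, l, tol=1e-9):
    """Bisect on the energy until the tail y(r_max) changes sign."""
    u_low = u_at_rmax(e_low, l)
    if u_low * u_at_rmax(e_high, l) > 0:
        raise ValueError("bracket does not contain an eigenvalue")
    while e_high - e_low > tol:
        e_mid = 0.5 * (e_low + e_high)
        u_mid = u_at_rmax(e_mid, l)
        if u_mid * u_low > 0:
            e_low, u_low = e_mid, u_mid
        else:
            e_high = e_mid
    return 0.5 * (e_low + e_high)


if __name__ == "__main__":
    # Energies in hartree; the Bohr formula gives -1/(2 n^2).
    print("1s:", find_eigenvalue(-0.6, -0.4, 0))
    print("2s:", find_eigenvalue(-0.2, -0.1, 0))
    print("2p:", find_eigenvalue(-0.2, -0.1, 1))
```

Outward shooting is adequate for the low-lying states checked here; for higher states or other potentials, the two-sided matching described in the text is more robust because it avoids integrating into the classically forbidden region where the unwanted solution grows.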

Appendix: More on the Quantum Defect

Explanation: JWKB Approach

It must always be kept in mind that the quantum defect is a phenomenological result. To explain how such a simple result arises is obviously an interesting challenge, but it is not to be expected that the solution of this problem will lead to great new physical insight. The only new results obtained from understanding quantum defects in one-electron systems are the connection between the quantum defect and the electron scattering length for the same system (Mott and Massey section III 6.2), which may be used to predict low energy electron scattering cross sections (ibid. XVII 9\&10), and the simple expressions relating $\delta_l$ to the polarizability of the core for larger $l$ \cite{Freeman1976}. The principal use of the quantum defect is to predict the positions of higher terms in a series for which $\delta_l$ is known. Explanations of the quantum defect range from the elaborate fully quantal explanation of Seaton \cite{Seaton1966} to the extremely simple treatment of Parsons and Weisskopf \cite{Parsons1967}, who assume that the electron cannot penetrate inside the core at all, but use the boundary condition $\psi(r_c) = 0$, which requires relabeling the lowest $ns$ state $1s$ since it has no nodes outside the core. This viewpoint has a lot of merit because the exclusion principle and the large kinetic energy of the electron inside the core combine to reduce the amount of time it spends in the core. This is reflected in the true wave function, which has $n$ nodes in the core and therefore never has a chance to reach a large amplitude in this region. To show the physics without much math (or rigor) we turn to the JWKB solution to the radial Schr\"odinger equation (see Messiah Ch.\ VI). Defining the wave number

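\begin{equation} k_l(r) = \frac{1}{\hbar}\sqrt{2m\left[E - V_{\rm eff}(r)\right]} \label{EQ_expone} \end{equation}

(with $V_{\rm eff}$ the effective radial potential including the centrifugal term), then the phase accumulated in the classically allowed region is

\begin{equation} \phi_l(E) = \int_{r_i}^{r_o} k_l(r)\, dr \end{equation}

where $r_i$ and $r_o$ are the inner and outer turning points. Bound state eigenvalues are found by setting

\begin{equation} \phi_l(E) = \left(n + \tfrac{1}{2}\right)\pi \label{EQ_expthree} \end{equation}

(The $\frac{1}{2}$ comes from the connection formulae and would be $\frac{1}{4}$ for an $l = 0$ state where $r_i = 0$. Fortunately it cancels out.) To evaluate $\phi$ for hydrogen use the Bohr formula for $n(E)$,

\begin{equation} \phi_H(E) = \pi\left[\left(\frac{R}{-E}\right)^{1/2} + \frac{1}{2}\right] \end{equation}

In the spirit of the JWKB approximation, we regard the phase as a continuous function of $E$. Now consider a one-electron atom with a core of inner shell electrons that lies entirely within $r_c$. Since it has a hydrogenic potential outside of $r_c$, its phase can be written (where $r_{iH}$, $r_{oH}$ are the inner and outer turning points for hydrogen at energy $E$):

\begin{equation} \phi_l(E) = \int_{r_i}^{r_c} k_l(r)\, dr + \int_{r_c}^{r_{oH}} k_H(r)\, dr = \int_{r_i}^{r_c} k_l(r)\, dr - \int_{r_{iH}}^{r_c} k_H(r)\, dr + \int_{r_{iH}}^{r_{oH}} k_H(r)\, dr \end{equation}

The final integral is the phase for hydrogen at some energy $E$, and can be written as $\phi_H(E)$. Designating the sum of the first two integrals by the phase $\delta\phi_l(E)$, we have

\begin{equation} \phi_l(E) = \phi_H(E) + \delta\phi_l(E) = \left(n + \tfrac{1}{2}\right)\pi \end{equation}

\noindent or

\begin{equation} \left(\frac{R}{-E}\right)^{1/2} = n - \frac{\delta\phi_l(E)}{\pi} \end{equation}

Hence, we can relate the quantum defect to a phase:

\begin{equation} \delta_l = \lim_{E \rightarrow 0} \frac{1}{\pi}\,\delta\phi_l(E) \end{equation}

\noindent since it is clear from Eq.\ \ref{EQ_expone} and the fact that the turning point is determined by $E - V_{\rm eff}(r_i) = 0$ that $\delta\phi_l$ approaches a constant as $E \rightarrow 0$. Now we can find the bound state energies for the atom with a core; starting with Eq.\ \ref{EQ_expthree},

\begin{equation} \left(n + \tfrac{1}{2}\right)\pi = \pi\left(\frac{R}{-E}\right)^{1/2} + \frac{\pi}{2} + \pi\delta_l \quad \Longrightarrow \quad E = -\frac{R}{(n - \delta_l)^2} \end{equation}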

Thus we have explained the Balmer-Ritz formula (Eq.\ \ref{EQ_phenthree}). If we look at the radial Schr\"odinger equation for the electron-ion-core system in the region $E > 0$, we are dealing with the scattering of an electron by a modified Coulomb potential (Mott \& Massey Chapter 3). Intuitively one would expect that there would be an intimate connection between the bound state eigenvalue problem described earlier in this chapter and this scattering problem, especially in the limit $E \rightarrow 0$ (from above and below). Since the quantum defects characterize the bound state problem accurately in this limit, one would expect that they should be directly related to the scattering phase shifts $\sigma_l(k)$ ($k$ is the momentum of the {\it free} particle), which obey

\begin{equation} \lim_{k \rightarrow 0} \cot\left[\sigma_l(k)\right] = \cot(\pi\delta_l) \label{EQ_expeight} \end{equation}

This has great intuitive appeal: as discussed above, $\pi\delta_l$ is precisely the phase shift of the wave function with the core present relative to the one with $V = -e^2/r$. On second thought Eq.\ \ref{EQ_expeight} might appear puzzling, since the scattering phase shift is customarily defined as the shift relative to the one with $V = 0$. The resolution of this paradox lies in the long range nature of the Coulomb interaction; it forces one to redefine the scattering phase shift, $\sigma_l(k)$, to be the shift relative to a pure Coulomb potential.

Quantum defects for a model atom

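Now we give a calculation of a quantum defect for a potential which is not physically realistic, and has only the virtue that it is easily solvable. The idea is to put an extra term in the potential which goes as $1/r^2$, so that the radial Schr\"odinger equation (Eq.\ \ref{EQ_secpten}) can be solved simply by adjusting $l$. The electrostatic potential corresponds to having all the core electrons in a small cloud of size $r_n$ (which is a nuclear size) which decays as an inverse power of $r$:

\begin{equation} \phi(r) = \frac{e}{r}\left[1 + (Z-1)\frac{r_n}{r}\right] \end{equation}

At $r = r_n$, $\phi = Ze/r_n$. We presume $r_n$ is the nuclear size and pretend that it is so small we don't have to worry about what happens inside it. When the potential $V(r) = -e\phi(r)$ is substituted in Eq.\ \ref{EQ_rehone}, one has

\begin{equation} \frac{d^2 y}{dr^2} + \left[\frac{2m}{\hbar^2}\left(E + \frac{e^2}{r}\right) + \frac{A - l(l+1)}{r^2}\right] y = 0 \label{EQ_qdanctwo} \end{equation}

If one now defines

\begin{equation} A = \frac{2m e^2 (Z-1) r_n}{\hbar^2} = \frac{2(Z-1) r_n}{a_0} \end{equation}

then since $A > 0$, $l' < l$ and one can write

\begin{equation} l'(l'+1) = l(l+1) - A \label{EQ_qdancfour} \end{equation}

Substituting Eq.\ \ref{EQ_qdancfour} in Eq.\ \ref{EQ_qdanctwo} gives the radial Schr\"odinger equation for hydrogen (Eq.\ \ref{EQ_secpten}), except that $l'$ replaces $l$; eigenvalues occur when (see Eq.\ \ref{EQ_rehfourteen})

\begin{equation} \nu = n^* = n' + l' + 1 \end{equation}

where $n'$ is an integer. Using $n = n' + l + 1$ as before, we obtain the corresponding eigenvalues as

\begin{equation} E_n = -\frac{R}{(n^*)^2} = -\frac{R}{\left[n - (l - l')\right]^2} = -\frac{R}{(n - \delta_l)^2} \end{equation}

The quantum defect is independent of $E$:

\begin{equation} \delta_l = l - l' \end{equation}

Eq.\ \ref{EQ_qdancfour} may be solved for $l'$ using the standard quadratic form. Retaining the solution for which $l' \rightarrow l$ as $A \rightarrow 0$ gives

\begin{equation} \delta_l = \left(l + \tfrac{1}{2}\right) - \sqrt{\left(l + \tfrac{1}{2}\right)^2 - A} \approx \frac{A}{2l+1} \end{equation}

This shows that $\delta_l \rightarrow 0$ as $l \rightarrow \infty$. In contrast to the predictions of the above simple model, quantum defects for realistic core potentials decrease much more rapidly with increasing $l$ and generally exhibit $\delta_l$ close to zero for all $l$ greater than the largest $l$ value occupied by electrons in the core.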
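The model quantum defect can be evaluated directly. The sketch below assumes the extra $1/r^2$ term in the potential is summarized by a single dimensionless strength, here called `A` (an illustrative symbol), so that the effective angular momentum $l'$ satisfies $l'(l'+1) = l(l+1) - A$ and the quantum defect is $\delta_l = l - l'$. It keeps the root with $l' \rightarrow l$ as $A \rightarrow 0$ and compares it with the small-$A$ estimate $A/(2l+1)$.

```python
import math


def effective_l(l, A):
    """Root of l'(l'+1) = l(l+1) - A that tends to l as A -> 0."""
    discriminant = (l + 0.5) ** 2 - A
    if discriminant < 0:
        raise ValueError("A is too large: no real effective angular momentum")
    return -0.5 + math.sqrt(discriminant)


def model_quantum_defect(l, A):
    """Quantum defect delta_l = l - l' of the model atom."""
    return l - effective_l(l, A)


if __name__ == "__main__":
    A = 0.1  # hypothetical strength of the 1/r^2 term
    for l in range(5):
        exact = model_quantum_defect(l, A)
        estimate = A / (2 * l + 1)
        print(f"l = {l}: delta = {exact:.5f}, A/(2l+1) = {estimate:.5f}")
```

The slow $1/(2l+1)$ falloff is the feature that distinguishes this model from realistic cores.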

Metrology and Precision Measurement and Units

As scientists we take the normal human desire to understand the world to quantitative extremes. We demand agreement of theory and experiment to the greatest accuracy possible. We measure quantities way beyond the current level of theoretical understanding in the hope that this measurement will be valuable as a reference point or that the difference between our value and some other nearly equal or simply related quantity will be important. The science of measurement, called metrology, is indispensable to this endeavor because the accuracy of measurement limits the accuracy of experiments and their intercomparison. In fact, the construction, intercomparison, and maintenance of a system of units is really an art (with some, a passion), often dependent on the latest advances in the art of physics (e.g. quantized Hall effect, cold atoms, trapped particle frequency standards). As a result of this passion, metrological precision typically marches forward a good fraction of an order of magnitude per decade. Importantly, measurements of the same fundamental constant in different fields of physics (e.g. atomic structure, QED, and solid state) provide one of the few cross-disciplinary checks available in a world of increasing specialization. Precise null experiments frequently rule out alternative theories, or set limits on present ones. Examples include tests of local Lorentz invariance and the equivalence principle, searches for atomic lines forbidden by the exclusion principle, searches for electric dipole moments (which violate time reversal invariance), and the recent searches for a "fifth [gravitational] force". A big payoff, often involving new physics, sometimes comes from attempts to achieve routine progress. In the past, activities like further splitting of a spectral line, increased precision, and trying to understand residual noise have led to the discovery of fine and hyperfine structure, the anomalous Zeeman effect, the Lamb shift, and the 3 K background radiation.
One hopes that the future will bring similar surprises. Thus, we see that precision experiments, especially those involving fundamental constants or metrology, not only solidify the foundations of physical measurement and theories, but occasionally open new frontiers.

Dimensions and Dimensional Analysis

Oldtimers were brought up on the mks system: meter, kilogram, and second. This simple designation emphasized an important fact: three dimensionally independent units are sufficient to span the space of all physical quantities. The dimensions are respectively $L$ (length), $M$ (mass), and $T$ (time). These three dimensions suffice because when a new physical quantity is discovered (e.g. charge, force) it always obeys an equation which permits its definition in terms of m, k, and s. Some might argue that fewer dimensions are necessary (e.g. that time and distance are the same physical quantity since they transform into each other in moving reference frames); we'll keep them both, noting that the definition of length is now based on the speed of light. Practical systems of units have additional units beyond those for the three dimensions, and often additional "as defined" units for the same dimensional quantity in special regimes (e.g. x-ray wavelengths or atomic masses). Dimensional analysis consists simply in determining for each quantity its dimension along the three dimensions (seven if you use the SI system rigorously), of the form

\begin{equation} \textrm{Dimension} = M^{\alpha} L^{\beta} T^{\gamma} \end{equation}

Dimensional analysis yields an estimate for a given unknown quantity by combining the known quantities so that the dimension of the combination equals the dimension of the desired unknown. The art of dimensional analysis consists in knowing whether the wavelength or height of the water wave (both with dimension $L$) is the length to be combined with the density of water and the local gravitational acceleration to predict the speed of the wave.
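The water-wave example can be made concrete. Writing the speed as $v \propto \rho^a g^b \ell^c$ and matching dimensions gives $a = 0$ (the density is the only quantity containing mass, so it drops out) and $b = c = 1/2$, i.e. $v \propto \sqrt{g\ell}$. Dimensional analysis alone cannot say which length $\ell$ to use or what the dimensionless prefactor is. The sketch below uses the standard hydrodynamic results for the two limiting cases, which go beyond the dimensional argument; the function names are illustrative.

```python
import math

G = 9.81  # local gravitational acceleration, m/s^2


def deep_water_speed(wavelength_m):
    """Phase speed when the depth is much larger than the wavelength.

    The relevant length is the wavelength; the factor 1/(2 pi) comes from
    the full hydrodynamic treatment, not from dimensional analysis.
    """
    return math.sqrt(G * wavelength_m / (2.0 * math.pi))


def shallow_water_speed(depth_m):
    """Wave speed when the wavelength is much larger than the depth.

    Here the relevant length is the water depth.
    """
    return math.sqrt(G * depth_m)


if __name__ == "__main__":
    print(f"100 m ocean swell:        {deep_water_speed(100.0):.1f} m/s")
    print(f"long wave in 4 km depth:  {shallow_water_speed(4000.0):.0f} m/s")
```

In both limits the speed has the form $\sqrt{g \times \textrm{length}}$; the physics lies in choosing the right length.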

SI units

A single measurement of a physical quantity, by itself, never provides information about the physical world, but only about the size of the apparatus or the units used. In order for a single measurement to be significant, some other experiment or experiments must have been done to measure these "calibration" quantities. Often these have been done at an accuracy far exceeding our single measurement so we don't have to think twice about them. For example, if we measure the frequency of a rotational transition in a molecule to six digits, we hardly have to worry about the calibration of the frequency generator if it is a high-accuracy model that is good to nine digits. And if we are concerned, we can calibrate it with an accuracy of several more orders of magnitude against station WWV or GPS satellites, which give time valid to 20 ns or so.

Time/frequency is currently the most accurately measurable physical quantity and it is relatively easy to measure to . In the SI (Système International, the agreed-upon system of weights and measures) the second is defined as 9,192,631,770 periods of the Cs hyperfine oscillation in zero magnetic field. Superb Cs beam machines at places like NIST-Boulder provide a realization of this definition at about . Frequency standards based on laser-cooled atoms and ions have the potential to do several orders of magnitude better owing to the longer possible measurement times and the reduction of Doppler frequency shifts. To give you an idea of the challenges inherent in reaching this level of precision, if you connect a 10 meter coaxial cable to a frequency source good at the level, the frequency coming out the far end in a typical lab will be an order of magnitude less stable - can you figure out why?

The meter was defined at the first General Conference on Weights and Measures in 1889 as the distance between two scratches on a platinum-iridium bar when it was at a particular temperature (and pressure).
Later it was defined more democratically, reliably, and reproducibly in terms of the wavelength of a certain orange krypton line. Most recently it has been defined as the distance light travels in 1/299,792,458 of a second. This effectively defines the speed of light, but highlights the distinction between defining and realizing a particular unit. Must you set up a speed of light experiment any time you want to measure length? No: just measure it in terms of the wavelength of a He-Ne laser stabilized on a particular hfs component of a particular methane line within its tuning range; the frequency of this line has been measured to about a part in and it may seem that your problem is solved. Unfortunately the reproducibility of the locked frequency and problems with diffraction in your measurement both limit length measurements at about . A list of spectral lines whose frequency is known to better than is given in Appendix II of NIST Special Publication 330, available on the web\footnote{http://physics.nist.gov/Pubs/SP330/cover.html} (see both publications and reference data). The latest revision of the CODATA fundamental constants is available from NIST at http://physics.nist.gov/constants , and a previous version is published in Reference \cite{codata2002}.

The third basic unit of the SI system is the kilogram, the only SI base unit still defined in terms of an artifact - in this case a platinum-iridium cylinder kept in clean air at the Bureau International des Poids et Mesures in Sèvres, France. The dangers of mass change due to cleaning, contamination, handling, or accident are so great that this cylinder has been compared with the dozen secondary standards that reside in the various national measurement laboratories only twice in the last century. Clearly one of the major challenges for metrology is replacement of the artifact kilogram with an atomic definition. This could be done analogously with the definition of length by defining Avogadro's number. However, while atomic mass can be measured to , there is currently no sufficiently accurate method of realizing this definition. (The unit of atomic mass, designated by is 1/12 the mass of a $^{12}$C atom.)

There are four more base units in the SI system - the ampere, kelvin, mole, and candela - for a total of seven. While three are sufficient (or more than sufficient) to do physics, the other four reflect the current situation that electrical quantities, atomic mass, temperature, and luminous intensity can be and are regularly measured with respect to auxiliary standards at levels of accuracy greater than that with which they can be expressed in terms of the above three base units. Thus measurements of Avogadro's constant, the Boltzmann constant, or the mechanical equivalents of electrical units play the role of interrelating the base units of mole (the number of atoms of $^{12}$C in 0.012 kg of $^{12}$C), kelvin, or the new de facto volt and ohm (defined in terms of the Josephson and quantized Hall effects, respectively). In fact, independent measurement systems exist for other quantities such as x-ray wavelength (using diffraction from calcite or other standard crystals), but these other de facto measurement scales are not formally sanctioned by the SI system.
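As a small illustration of how the definitions of the second and the meter quoted above fit together, the following Python sketch converts between time, length, and frequency using only the two defining numbers given in the text. The function names are hypothetical helpers introduced for this example, not part of any standard library.

```python
# Defining numbers quoted in the text (both are exact by definition).
CS_HYPERFINE_HZ = 9_192_631_770   # Cs hyperfine periods in one second
C_M_PER_S = 299_792_458           # meters traveled by light in one second


def cs_periods(duration_s):
    """Number of Cs hyperfine periods elapsed in a given time interval."""
    return duration_s * CS_HYPERFINE_HZ


def light_travel_distance(duration_s):
    """Distance in meters that light travels in vacuum in duration_s seconds."""
    return C_M_PER_S * duration_s


def vacuum_wavelength(frequency_hz):
    """Vacuum wavelength in meters of radiation with the given frequency."""
    return C_M_PER_S / frequency_hz


# A 20 ns timing uncertainty corresponds to about 6 m of light travel.
print(light_travel_distance(20e-9))

# Wavelength of the Cs hyperfine radiation itself, roughly 3.26 cm.
print(vacuum_wavelength(CS_HYPERFINE_HZ))
```

Because the speed of light is fixed by definition, any frequency measured against the Cs standard translates into a vacuum wavelength with no additional uncertainty; the practical limits are the realization issues (reproducibility, diffraction) described in the text.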

Metrology

The preceding discussion gives a rough idea of the definitions and realizations of SI units, and some of the problems that arise in trying to define a unit for some physical quantity (e.g., mass) that will work across many orders of magnitude. However, it sidesteps questions of the border between metrology and precision measurements. (Here we have used the phrase "precision measurement" colloquially to indicate an accurate absolute measurement; if we were verbally precise, precision would imply only excellent relative accuracy.) It is clear that if we perform an experiment to measure Boltzmann's constant we are not learning any fundamental physics; we are just measuring the ratio of energy scales defined by our arbitrary definitions of the first three base units on the one hand and the thermal energy of the triple point of water on the other. This is clearly a metrological experiment. Similarly, measuring the hfs frequency of Cs would be a metrological experiment in that it would only determine the length of the second. If we measure the hfs frequency of H with high accuracy, this might seem like a physics experiment since this frequency can be predicted theoretically. Unfortunately theory runs out of gas at about due to lack of accurate knowledge about the structure of the proton (which causes a 42 ppm shift). Any digits past this are just data collection until one gets to the 14th, at which point one becomes able to use an H maser as a secondary time standard. This has stability advantages over Cs beams for time periods ranging from seconds to days and so might be metrologically useful -- in fact, it is widely used in very long baseline radio astronomy. One might ask "Why use arbitrarily defined base units when Nature has given us quantized quantities already?" Angular momentum and charge are quantized in simple multiples of $\hbar$ and $e$, and mass is quantized also, although not so simply.
We then might define these as the three main base units - who says we have to use mass, length, and time? Unfortunately the measurements of $\hbar$, $e$, and $1/N_A$ (which may be thought of as the mass quantum in grams) are only accurate at about the level, well below the accuracy of the realization of the current base units of SI. The preceding discussion seems to imply that measurements of fundamental constants like $\hbar$, $e$, and $1/N_A$ are merely determinations of the size of SI units in terms of the quanta of Nature. In reality, the actual state of affairs in the field of fundamental constants is much more complicated. The complication arises because there are no single accurate experiments that determine these quantities - real experiments generally determine some combination of these, perhaps with some other calibration-type variables thrown in (e.g., the lattice spacing of Si crystals). To illustrate this, consider measurement of the Rydberg constant,

\[ R_\infty = \frac{m e^{4}}{4\pi \hbar^{3} c} \]

a quantity which is an inverse length (an energy divided by $hc$), closely related to the number of wavelengths per cm of light emitted by an H atom (the units are often given in spectroscopists' units, cm$^{-1}$, to the dismay of SI purists). Clearly such a measurement determines a linear combination of the desired fundamental quantities. Too bad, because it has recently been measured by several labs with results that agree to . (In fact, the quantity that is measured is the Rydberg frequency for hydrogen, $cR_H$, since there is no way to measure wavelength to such precision.) This example illustrates a fact of life of precision experiments: with care you can trust the latest adjustment of the fundamental constants and the metrological realizations of physical quantities at accuracies to ; beyond that the limit on your measurement may well be partly metrological. In that case, what you measure is not in general clearly related to one single fundamental constant or metrological quantity. The results of your experiment will then be incorporated into the least squares adjustment of the fundamental constants, and the importance of your experiment is determined by the size of its error bar relative to the uncertainty of all other knowledge about the particular linear combination of fundamental and metrological variables that you have measured. \end{subappendices}
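To make the point about combinations of constants concrete, the following Python sketch evaluates the Rydberg constant from the SI form $R_\infty = m_e e^4 / (8 \varepsilon_0^2 h^3 c)$, which is equivalent to the Gaussian expression $m e^4 / (4\pi\hbar^3 c)$, and shows how a small fractional change in one input propagates to the result. The numerical inputs are assumed CODATA 2018 values (they do not come from the text), and the names `rydberg` and `fractional_response` are hypothetical.

```python
# Assumed inputs: CODATA 2018 recommended values in SI units (not from the text).
CODATA_2018 = {
    "m_e": 9.1093837015e-31,    # electron mass, kg
    "e": 1.602176634e-19,       # elementary charge, C
    "eps0": 8.8541878128e-12,   # vacuum permittivity, F/m
    "h": 6.62607015e-34,        # Planck constant, J s
    "c": 299_792_458.0,         # speed of light, m/s
}

# Power with which each constant enters R_inf = m_e e^4 / (8 eps0^2 h^3 c).
EXPONENTS = {"m_e": 1, "e": 4, "eps0": -2, "h": -3, "c": -1}


def rydberg(constants):
    """Rydberg constant in m^-1 as a product of powers of the inputs."""
    value = 1.0 / 8.0
    for name, power in EXPONENTS.items():
        value *= constants[name] ** power
    return value


def fractional_response(name, delta, constants=CODATA_2018):
    """Fractional change in R_inf when one input is scaled by (1 + delta)."""
    shifted = dict(constants)
    shifted[name] = constants[name] * (1.0 + delta)
    return rydberg(shifted) / rydberg(constants) - 1.0


print(rydberg(CODATA_2018))              # in m^-1; divide by 100 for cm^-1
print(fractional_response("e", 1e-6))    # close to 4e-6
print(fractional_response("h", 1e-6))    # close to -3e-6
```

For small changes the fractional response is just the exponent times the fractional shift, so a measurement of $R_\infty$ constrains the combination $\ln m_e + 4\ln e - 2\ln\varepsilon_0 - 3\ln h - \ln c$ rather than any single constant. This is the sense in which the combination is linear: it is linear in the logarithms of the constants.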

![{\displaystyle \langle r_{n\ell }\rangle ={\frac {n^{2}a_{0}}{Z}}\{1+{\frac {1}{2}}[1-{\frac {\ell (\ell +1)}{n^{2}}}]\}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/20007b307fe177b643a0543125ed8e7e90ff24e1)

![{\displaystyle {\begin{aligned}\Delta E&={\frac {1}{2}}\int \int {\frac {e^{2}}{r_{12}}}[|\psi _{100}(r_{1})|^{2}|\psi _{200}(r_{2})|^{2}+(r_{1}\leftrightarrow r_{2})]\\&\pm {\frac {1}{2}}\int \int {\frac {e^{2}}{r_{12}}}[\psi _{100}^{*}(r_{1})\psi _{200}^{*}(r_{2})\psi _{100}(r_{2})\psi _{200}(r_{1})+(r_{1}\leftrightarrow r_{2})]\\&=\Delta E^{Coul}\pm \Delta E^{exch}\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/4f7da0a65ae741e23636787881de55fc50acbe4a)

![{\displaystyle [P_{ij},{\hat {O}}]=0}](https://wikimedia.org/api/rest_v1/media/math/render/svg/d769b158d5a631785fbe3e4a49023eeb2ea0cabd)

![{\displaystyle [H({\bf {r}})-E_{n}]\psi _{n}({\bf {r}})=0}](https://wikimedia.org/api/rest_v1/media/math/render/svg/2203017f2e2246ba78bf58782a2a8ed897107924)

![{\displaystyle =-{\frac {\hbar ^{2}}{2\mu }}{\left[{\frac {1}{r^{2}}}{\frac {\partial }{\partial r}}{\left(r^{2}{\frac {\partial }{\partial r}}\right)}+{\frac {1}{r^{2}\sin \theta }}{\frac {\partial }{\partial \theta }}{\left(\sin \theta {\frac {\partial }{\partial \theta }}\right)}+{\frac {1}{r^{2}\sin ^{2}\theta }}{\frac {\partial ^{2}}{\partial \phi ^{2}}}\right]}+V(r)}](https://wikimedia.org/api/rest_v1/media/math/render/svg/80b71a6cc54eac04829756cc17c5105dae5d273a)

![{\displaystyle {\frac {1}{r^{2}}}{\frac {d}{dr}}{\left(r^{2}{\frac {dR_{n\ell }}{dr}}\right)}+{\left[{\frac {2\mu }{\hbar ^{2}}}{\left[E_{n\ell }-V(r)\right]}-{\frac {\ell (\ell +1)}{r^{2}}}\right]}R_{n\ell }=0}](https://wikimedia.org/api/rest_v1/media/math/render/svg/969ca6bedf92900bda3f076237a40179b01c1759)

![{\displaystyle {\frac {d^{2}y_{n\ell }(r)}{dr^{2}}}+{\left[{\frac {2\mu }{\hbar ^{2}}}[E_{n\ell }-V(r)]-{\frac {\ell (\ell +1)}{r^{2}}}\right]}y_{n\ell }(r)=0}](https://wikimedia.org/api/rest_v1/media/math/render/svg/279fd229ad119851a0d0f02db2990bb8c6edc661)

![{\displaystyle {\frac {d^{2}y_{n\ell }(r)}{dr^{2}}}+{\left[{\frac {2\mu }{\hbar ^{2}}}\left[E_{n}+{\frac {e^{2}}{r}}\right]-{\frac {\ell (\ell +1)}{r^{2}}}\right]}y_{n\ell }(r)=0}](https://wikimedia.org/api/rest_v1/media/math/render/svg/66ee354606ea1ea840f353e32f914d91d1ec6f9d)

![{\displaystyle x=[2(-2\mu E)^{1/2}/\hbar ]r}](https://wikimedia.org/api/rest_v1/media/math/render/svg/9dd35bf514a08ac0f1d072604df1b1e3f67f1093)

![{\displaystyle {\left[x{\frac {d^{2}}{dx^{2}}}+(2\ell +2-x){\frac {d}{dx}}-(\ell +1-v)\right]}v_{\ell }=0}](https://wikimedia.org/api/rest_v1/media/math/render/svg/337ad02367cc7f4645e0e211a4e0df9cb1f7e998)

![{\displaystyle k_{\ell }(r)={\sqrt {2m[E-V_{\rm {eff}}(r)]}}/\hbar ~~({\rm {remember}}~V_{\rm {eff}}~~{\rm {depends~on}}~\ell )}](https://wikimedia.org/api/rest_v1/media/math/render/svg/cc46d9f3b823df8c188a91414168ffb8fceae34f)

![{\displaystyle [\phi _{\ell }(E)-\pi /2]=n\pi }](https://wikimedia.org/api/rest_v1/media/math/render/svg/5a8c494c984f0205ad9612fabd47dd2e7cd9654e)

![{\displaystyle \delta _{\ell }=\left[\int _{r_{i}}^{r_{c}}k(r)dr-\int _{r_{iH}}^{r_{c}}k_{H}(r)dr\right]{\frac {1}{\pi }}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/69e65d0c56e2c4af8ecb72c2af97a693d8faa530)

![{\displaystyle \Rightarrow E=hcR_{H}/[n-\delta _{L}(E)]^{2}\equiv {\frac {hcR_{H}}{[n-\delta _{\ell }^{(0)}+\delta _{\ell }^{(1)}hcR_{H}/n^{2}]^{2}}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/d1c75d2d15282555af5024eea0f3e1324d6b481e)

![{\displaystyle \lim _{k\rightarrow 0}\cot[\sigma _{\ell }(k)]=\pi \delta _{\ell }^{o}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/41eac6670fcbaf0775688f244b79d215db47f95c)

![{\displaystyle {\frac {d^{2}y}{dr^{2}}}+{\left[{\frac {2\mu }{\hbar ^{2}}}{\left[E_{n}+{\frac {e^{2}}{r}}\right]}-{\frac {\ell (\ell +1)-A}{r^{2}}}\right]}y=0}](https://wikimedia.org/api/rest_v1/media/math/render/svg/b73cb0298fc57100189b50a43ca1f6b5377be889)

![{\displaystyle \ell +1/2)^{-3}]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/d8b77b349377a7a025c861e2091782d4f1dcebfd)

![{\displaystyle {\text{Dimension}}(G)\equiv [G]=m^{-1}l^{3}t^{-2}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a92d6a4db8283093d70a9440c99257ff670e73a9)